When Jared Ellis saw ChatGPT's early responses, most support leaders were planning cautious pilots. Ellis went the opposite direction: he rolled out AI to 100% of Culture Amp's support interactions within months. But the speed wasn't reckless; comprehensive safety systems are what made that velocity possible.

Ellis leads customer-facing support at Culture Amp, an employee experience platform that helps companies measure engagement, professional development, and performance. He joined as the company's first support specialist when Culture Amp was just over 100 people, building the support function from scratch and defining how it would serve HR professionals using the platform.

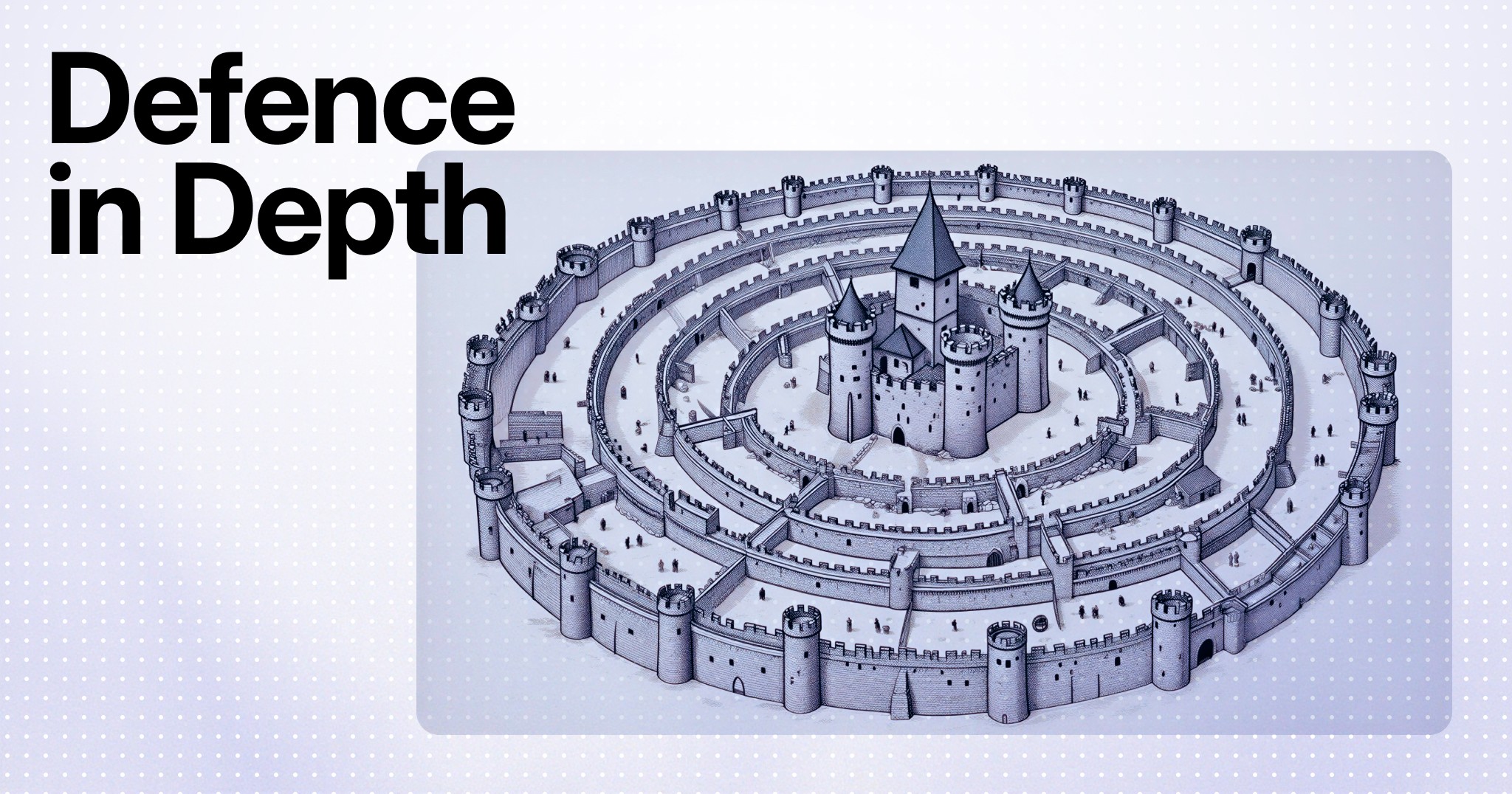

The counterintuitive insight: the biggest obstacle to responsible AI adoption isn't moving too fast; it's moving too slowly to build the safeguards that make speed safe. Conventional wisdom says to test AI incrementally on low-risk questions, but Ellis discovered that limited deployment prevents you from learning where your safety systems actually need reinforcement. The answer isn't to go slow; it's to invest heavily in compliance mechanisms and escape hatches that let you move confidently.

Why Safety-First Doesn't Mean Slow

Ellis didn't advocate for moving fast and breaking things. He advocated for building comprehensive safety infrastructure that makes fast learning possible without customer harm.

The core principle: you can't learn where AI will genuinely struggle until you expose it to real complexity, but you need protection systems in place first. "I'm going to go all in, but I have the escape hatches really well planned out," Ellis told leadership. Those escape hatches weren't afterthoughts; they were the entire foundation that justified aggressive deployment.

Culture Amp had humans watching the AI's initial responses in real time, ready to intervene immediately. The team also configured automatic ejection for compliance-sensitive conversations whenever specific keywords appeared. Most major support platforms offer this kind of "if it says X, do Y" routing, but Culture Amp treated it as a safety net to put in place before expanding AI's reach.
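To make the keyword-ejection idea concrete, here is a minimal Python sketch of an "if it says X, do Y" rule. The keyword list, `route_message`, and `RoutingDecision` are hypothetical illustrations, not Culture Amp's configuration or any support platform's API; real platforms typically expose this through their own rule builders.

```python
import re
from dataclasses import dataclass

# Hypothetical compliance-sensitive phrases; a real list would come from
# legal and compliance review, not from this sketch.
EJECTION_KEYWORDS = ("gdpr", "data deletion", "lawsuit", "harassment", "subpoena")

@dataclass
class RoutingDecision:
    handler: str              # "ai" keeps the conversation; "human" ejects it
    matched: tuple[str, ...]  # phrases that triggered the ejection, if any

def route_message(text: str) -> RoutingDecision:
    """If the message mentions any listed phrase (X), hand it to a human (Y)."""
    lowered = text.lower()
    matched = tuple(
        kw for kw in EJECTION_KEYWORDS
        # Word boundaries avoid matching a phrase buried inside a longer word.
        if re.search(r"\b" + re.escape(kw) + r"\b", lowered)
    )
    return RoutingDecision(handler="human" if matched else "ai", matched=matched)

# RoutingDecision(handler='ai', matched=())
print(route_message("How do I export survey results?"))
# RoutingDecision(handler='human', matched=('gdpr', 'data deletion'))
print(route_message("We need to action a GDPR data deletion request"))
```

Keyword matching is deliberately blunt: it will sometimes eject conversations the AI could have handled, which is arguably the safer way to fail on compliance-sensitive topics.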

The Compliance-Aware Escalation Framework

Every conversation that escalates from AI to a human specialist triggers a mandatory learning loop, not only for quality but also for safety. The specialist helps the customer while documenting "what was missing, why AI couldn't help in this instance."

This creates continuous monitoring without reviewing every interaction manually. If AI escalates a conversation, that's a signal something potentially went wrong. The escalation becomes both the safety catch and the learning mechanism.

Critically, specialists don't immediately patch problems themselves. One centralized team reviews all feedback and determines which controls to adjust: documentation updates, AI training refinements, or conversation design changes. This centralization prevents conflicting instructions that could undermine safety consistency or create compliance gaps.

Ellis emphasizes the importance of understanding cascading effects: "You don't want to be constantly piling on different ways for the AI to essentially interpret something that could then conflict and actually start to give negative results." If feedback indicates the AI lacked empathy, the team evaluates whether that's a systemic training issue or specific to one narrow circumstance, because the wrong fix could compromise other interactions.

Building Trust Through Gradual Oversight Reduction

Culture Amp didn't keep the same safety controls forever, but it reduced them methodically, based on evidence rather than optimism.

"This last couple of months has been the first time that I've had my team sit back a little bit and trust it a bit more from those escape hatches," Ellis notes, two years into their AI journey. That trust was earned through consistent performance data, mature documentation, and proven conversation design.

The team still keeps automatic ejection triggers for sensitive topics in place indefinitely. Some safety rails never come down; they're permanent fixtures that protect customers and the business from compliance risk.

Measuring Responsible Resolution, Not Just Deflection

Ellis stopped chasing first response time (AI made it essentially zero) and pivoted to metrics that reflect both quality and care. He tracks what he calls the "rate of automated resolution," deliberately reframing it away from "deflection rate."

The distinction matters. Deflection implies pushing customers away; automated resolution implies genuinely serving them. Modern AI tools can help assess whether customers actually got their problems solved or gave up in frustration, a critical measurement for responsible deployment.

This measurement philosophy extends to separating content quality from policy dissatisfaction. AI can identify when "the customer was understood and given a correct answer" versus "the customer didn't like that answer," so the team doesn't mistake policy feedback for service failures while still capturing genuine issues that need attention.
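The gap between deflection and automated resolution is easiest to see with numbers. The Python sketch below assumes each conversation carries two hypothetical flags, `escalated` and `confirmed_resolved` (the latter standing in for whatever resolution signal a given AI tool provides). It is one possible way to operationalize the distinction, not Culture Amp's actual formula.

```python
from dataclasses import dataclass

@dataclass
class Conversation:
    # Hypothetical fields; real platforms expose their own resolution signals.
    escalated: bool           # handed off to a human specialist
    confirmed_resolved: bool  # evidence the customer's problem was actually solved

def deflection_rate(convs: list[Conversation]) -> float:
    """Share of conversations that never reached a human, including abandoned ones."""
    if not convs:
        return 0.0
    return sum(not c.escalated for c in convs) / len(convs)

def automated_resolution_rate(convs: list[Conversation]) -> float:
    """Share of conversations the AI closed with evidence the problem was solved."""
    if not convs:
        return 0.0
    return sum((not c.escalated) and c.confirmed_resolved for c in convs) / len(convs)

# Example mix: 2 escalated, 6 resolved by the AI, 2 abandoned without resolution.
sample = (
    [Conversation(escalated=True, confirmed_resolved=False)] * 2
    + [Conversation(escalated=False, confirmed_resolved=True)] * 6
    + [Conversation(escalated=False, confirmed_resolved=False)] * 2
)
print(f"deflection: {deflection_rate(sample):.0%}")                      # deflection: 80%
print(f"automated resolution: {automated_resolution_rate(sample):.0%}")  # automated resolution: 60%
```

The two abandoned conversations count as successes under the deflection framing but not under automated resolution, which is exactly the difference between pushing customers away and genuinely serving them.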

The Quality Mandate That AI Enables

"The biggest fundamental shift for me during the introduction of AI has been that I get to focus more on quality, which is what I really cared about the most," Ellis explains.

With AI removing volume constraints, his team obsesses over excellence in complex human interactions. This isn't efficiency for efficiency's sake; it's about ensuring that when customers need human expertise, they receive genuinely thoughtful, careful support.

Aggressive AI deployment created space for more careful, attentive human work, but only because it rested on safety systems comprehensive enough to handle scale without compromising care.