When it comes to voice as a channel, most AI CX companies are solving the wrong problem. They're obsessed with making bots sound human – getting the "ums" just right, shaving milliseconds off response times, perfecting the cadence.

Making AI agents sound natural, expressive and conversational is clearly important, and something we care deeply about at Lorikeet. But here's what really matters. When someone calls support, their card just got declined at the grocery store. Their medication hasn't arrived. Their account is locked and payroll is tomorrow. They don't care if your bot sounds like Morgan Freeman. They care if it can fix their problem while they are on the line.

Voice isn't chat with a microphone

Much of the industry treats voice like text support with speech-to-text and text-to-speech bolted on. The stakes are much higher than that.

When you're on a call, the conversation never stops. You can't hit pause to look something up. You can't hide behind a loading spinner. You can't say "let me check" and take 30 seconds to figure out what's happening.

Everything happens live. The customer's talking, you're processing, you're searching knowledge bases, pulling account data, making API calls. All simultaneously. One too many awkward pauses and they're asking for a human.

The five strands that actually matter

After thousands of hours of customer calls, we've identified what makes voice AI work. Not in theory, but in production.

Clarity: Understanding before acting

A customer says "my card isn't working." Which card? Credit or debit? What kind of "not working" – declined, physically damaged, expired? Most bots guess wrong and waste everyone's time. Concierge asks for clarification and listens actively (those subtle "mm-hmm" signals that show you're following along) to build a model of the issue. It also updates its hypotheses in real time as the customer provides new information.

Agents need to balance clarification with a bias to action: knowing when to ask another question and when they have enough context to proceed. It's the difference between helpful and annoying.
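To make that trade-off concrete, here's a minimal Python sketch of one way an agent could choose between asking and acting in the declined-card example. It's illustrative only: `IssueHypothesis`, `next_step`, `QUESTIONS` and the slot names are hypothetical, not Lorikeet's implementation, and it assumes the agent can see which cards are on the customer's account.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IssueHypothesis:
    """Hypothetical model of what the agent currently believes about the issue."""
    card_type: Optional[str] = None     # e.g. "credit" or "debit"
    failure_mode: Optional[str] = None  # e.g. "declined", "damaged", "expired"


# Hypothetical clarifying questions, one per unknown slot.
QUESTIONS = {
    "card_type": "Is that your credit card or your debit card?",
    "failure_mode": "What happened when you used it? Was it declined, or is the card damaged or expired?",
}


def next_step(h: IssueHypothesis, cards_on_file: List[str]) -> str:
    """Return the next clarifying question, or an action once enough is known.

    Note: this updates `h` in place when a slot can be inferred.
    """
    # Bias to action: if the account has only one card, infer it instead of asking.
    if h.card_type is None and len(cards_on_file) == 1:
        h.card_type = cards_on_file[0]

    # Ask only about slots that are still unknown and would change what we do next.
    for slot in ("card_type", "failure_mode"):
        if getattr(h, slot) is None:
            return QUESTIONS[slot]

    return f"ACT: investigate {h.failure_mode} {h.card_type} card"
```

With two cards on file, the sketch asks which one; with a single card, it skips that question entirely. A production agent would weigh far more signals, but the principle holds: only ask when the answer would change what you do next.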

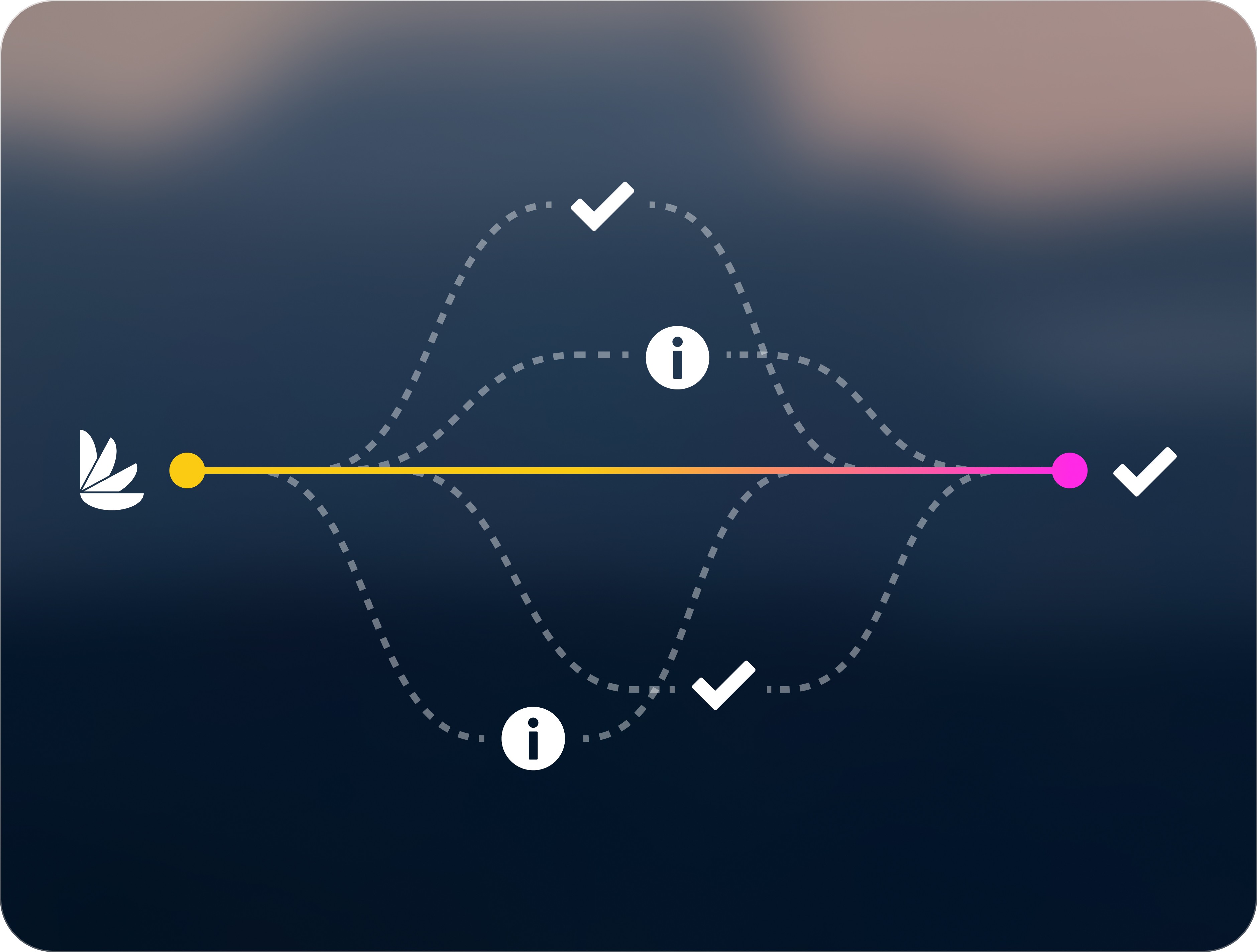

Continuity: Elegant context-switching

Humans don't talk in straight lines. They'll start explaining their billing issue, remember something about their last order, then jump back to the original problem. Three minutes later they'll reference something from the beginning of the call.

Your AI needs to maintain that entire context while processing new information in real time. It also needs to handle interruptions, corrections and callbacks – just like a human agent would – and preserve context across multiple channels (voice, text, chat and email).

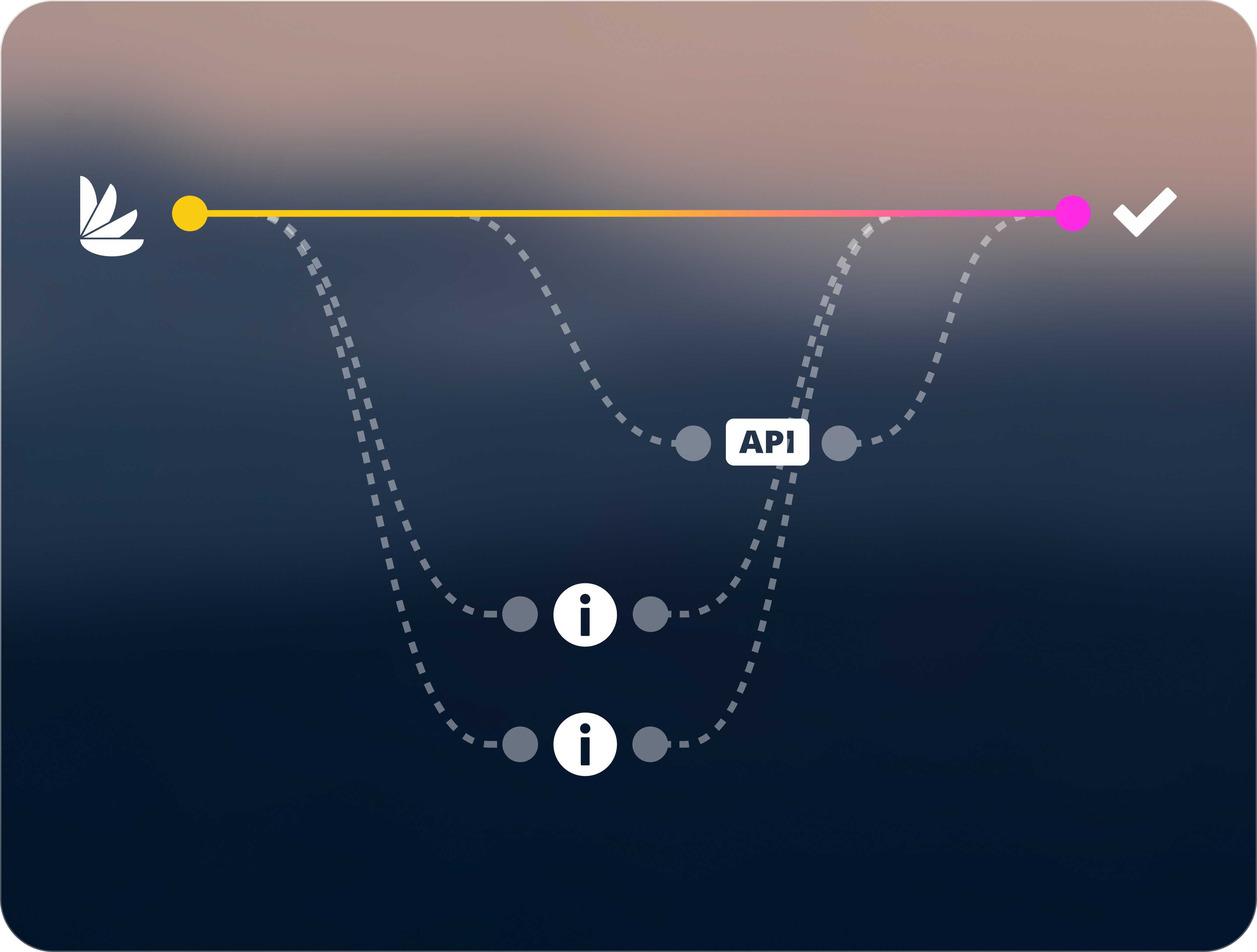

Progress: Active knowledge utilization

While the customer is explaining their problem, your agent needs to be searching documentation, checking their account history, spawning more support agents and identifying which systems to query, all without stopping the conversation. With that additional knowledge and context, it needs to assemble actionable solutions in real time.

We've seen competitors' bots say "let me look that up for you" followed by 10 seconds of silence. Giving the perception of intelligence requires parallel processing. Searching while listening. Understanding while responding.
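Here's a minimal sketch of "searching while listening" using Python's `asyncio`. The three coroutines (`search_docs`, `fetch_account`, `keep_talking`) are hypothetical stand-ins for a knowledge-base search, an account API call and the agent's spoken reply, and `asyncio.sleep` simulates their latency. This is not Lorikeet's pipeline, just an illustration of running the work concurrently rather than one step after another.

```python
import asyncio
import time


async def search_docs(query: str) -> str:
    await asyncio.sleep(0.3)  # hypothetical knowledge-base search latency
    return f"docs for '{query}'"


async def fetch_account(customer_id: str) -> str:
    await asyncio.sleep(0.3)  # hypothetical account/CRM API latency
    return f"account {customer_id}: latest card transaction declined"


async def keep_talking() -> str:
    await asyncio.sleep(0.3)  # hypothetical time spent speaking an acknowledgement
    return "Got it, your card was declined. Let me sort that out."


async def handle_turn(customer_id: str, utterance: str):
    start = time.perf_counter()
    # Run the lookups and the spoken reply concurrently instead of sequentially.
    docs, account, reply = await asyncio.gather(
        search_docs(utterance),
        fetch_account(customer_id),
        keep_talking(),
    )
    elapsed = time.perf_counter() - start
    return docs, account, reply, elapsed


docs, account, reply, elapsed = asyncio.run(handle_turn("cust-123", "card declined"))
print(f"{elapsed:.2f}s")  # roughly 0.3s rather than 0.9s, because the awaits overlap
```

Run sequentially, those three steps would take about 0.9 seconds. `asyncio.gather` overlaps them, so the turn takes roughly as long as the slowest step, and the customer hears a response instead of silence.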

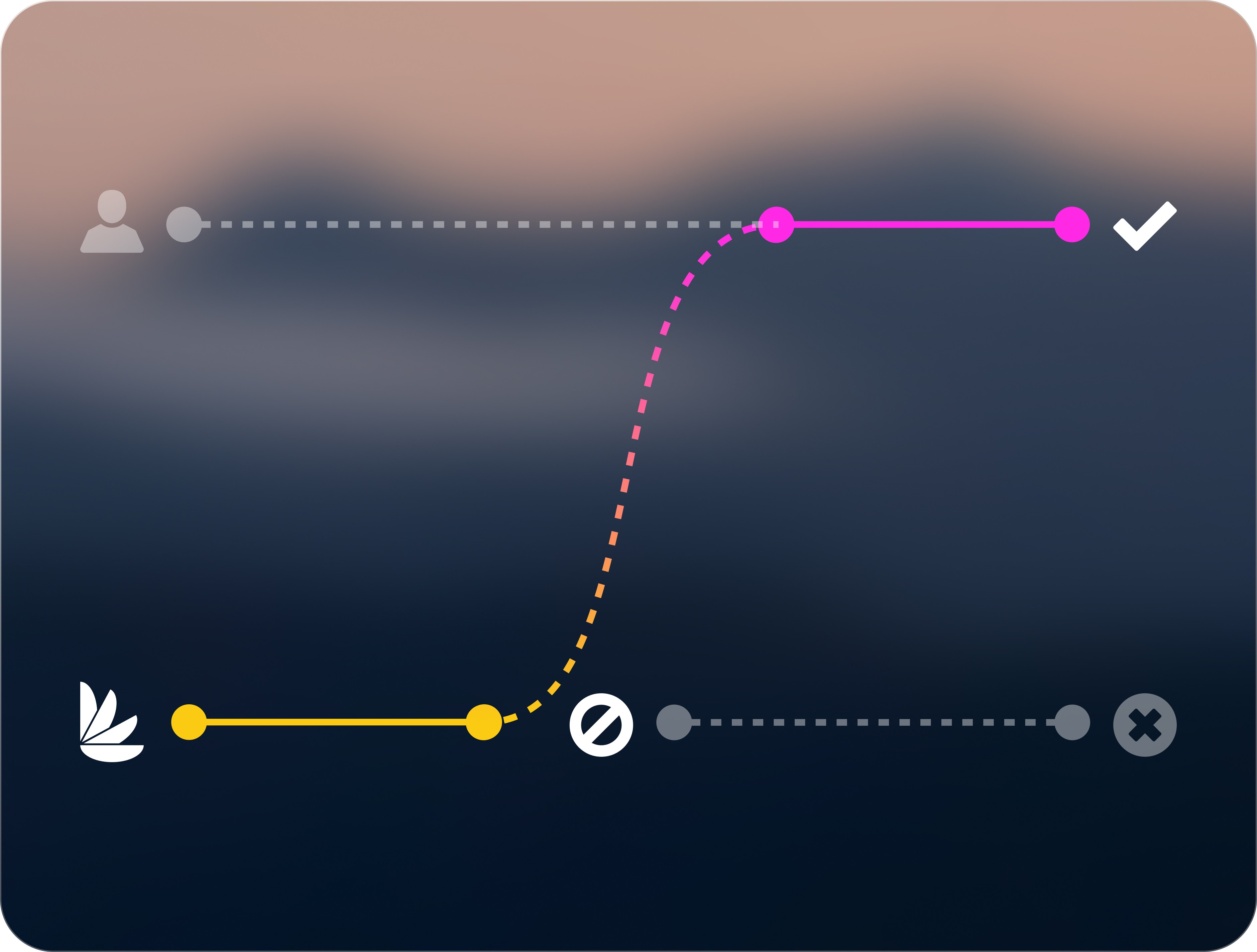

Decisiveness: Proactive, pragmatic judgement

Voice agents need to plan pre-emptive actions based on context and execute several actions at once, decisively. But what most vendors overlook is that the best voice agents know when to tap out.

They recognize when they're out of their depth before the customer gets frustrated. They understand emotional cues that signal someone needs human empathy, not efficient problem-solving. They know the difference between "I can handle this" and "I'm about to make things worse."

The problem is, competitors are so seduced by their own voice tech that they'd rather keep customers talking than actually solve their problems. The issue is primary. The voice is secondary. Always.

Humanity: Built-in empathy and imperfection

Customers aren't stupid. Even with improvements in the tech, they usually know they're talking to AI. The uncanny valley effect of fake empathy makes things worse, not better.

What works? Background office noise – it sounds real because it is real. Natural disfluencies that show processing, not pretense. Speaking at the right level for your customers and the context of their issue. That might mean a sixth-grade reading level for healthcare (that's the accessibility standard) or technical language for crypto customers who expect you to know the lingo.

Stop trying to fool people. Start trying to help them.

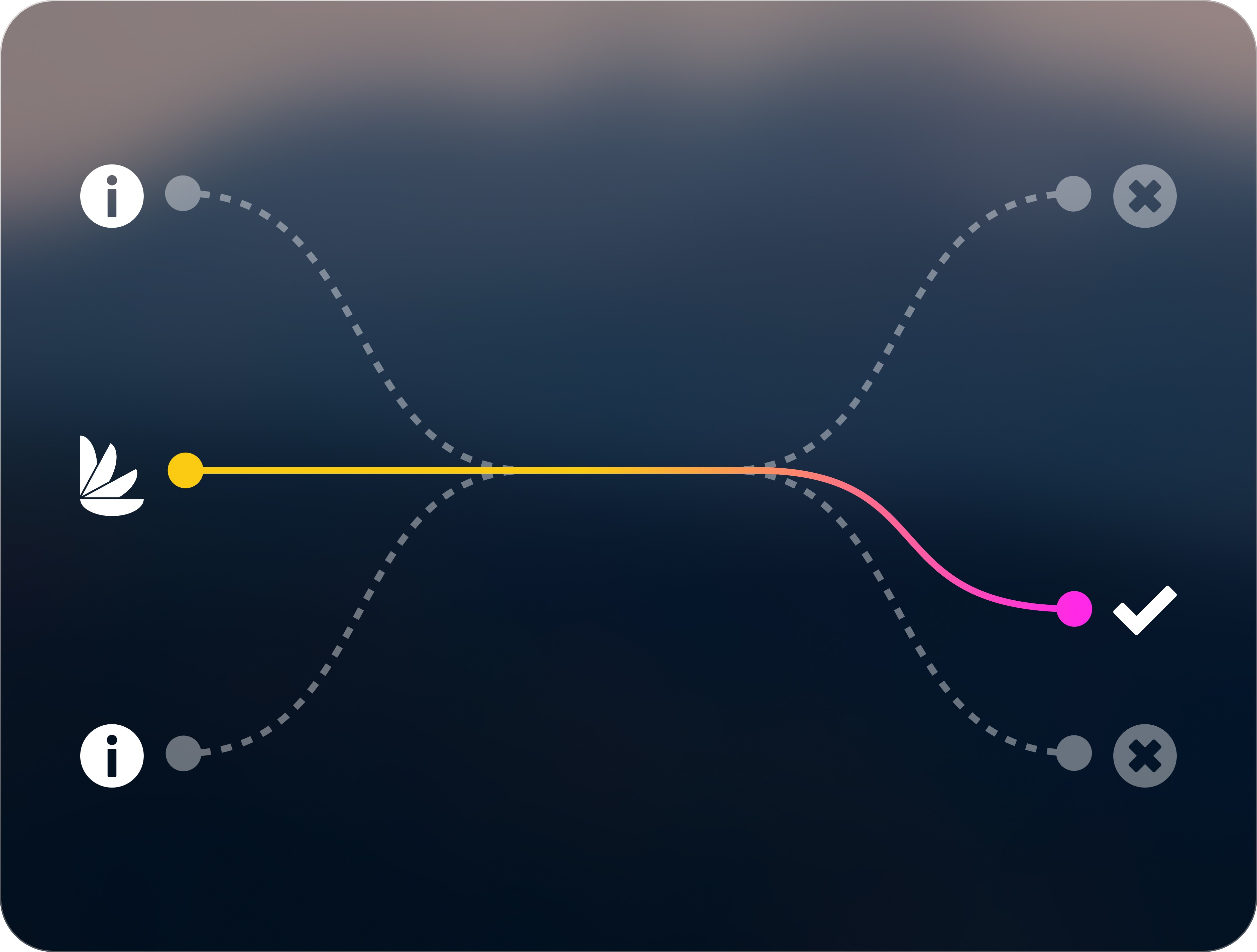

The part everyone's missing

While your customer's talking, your agent should be doing things. Actually doing them, not just talking about them.

Customer disputes a charge? Your agent calls the merchant while they're on the line. Need to book a specialist appointment? Your agent checks availability and sends the confirmation while explaining coverage. Account locked? Your agent texts a verification code while walking through next steps.

This is what we mean by multi-channel orchestration. Not "omnichannel presence". That's table stakes and usually marketing speak for "we have a chat widget." We mean actually using every channel simultaneously to solve problems faster.

Our agents send emails and texts and make calls to third parties while maintaining a natural conversation with your customer. That's the difference between voice AI that sounds good and voice AI that works.

What this means for you

Latency benchmarks are worthless if your bot can't solve problems. A human-sounding agent doesn't matter if customers hang up in frustration.

Start with your hardest problems. If your AI can handle someone whose card was just declined with rent due tomorrow, everything else is easy. If it can't, you're building an expensive virtual receptionist.

The economics change when AI actually works. Not when it deflects 80% of tickets and leaves customers frustrated, but when it resolves 80% completely. When your handle time drops not because calls are shorter, but because they don't need callbacks.

The bottom line

Voice AI that works isn't just about perfect imitation. It's about genuine capability. The five strands – clarity, continuity, progress, decisiveness, and humanity – work together, not in isolation.

We built Lorikeet around this reality. Not the promise of what voice AI could be, but what it needs to be today. It's live with real customers, handling real problems, right now.

The question isn't whether AI can sound human. It's whether AI can help humans. Everything else is just noise.